Supply, Demand, AI and Humans

The effects of AI, like everything else, depend on both supply and demand. A prelude, and then a parable.

As I have been reading and discussing large language models, I find I’ve learned as much about us humans as I have about the AI that replicates some of what we do. Introspecting, am I really that much more than a large language model?

I recognize that I have around a thousand stories. Most of my conversation and writing, especially for this blog, opeds, interviews, and discussions, consists of prompts which lead to one of those prepackaged stories. A given prompt could easily lead to a dozen different stories, so for a while I give the illusion of freshness to someone (not my wife and kids!) who hasn’t been around me that long. House prices are high in Palo Alto; should the government subsidize people to live here? Let me tell you about the vertical supply curve. Almost none of my stories are original. I do a lot of reading and talking, so I pick up more stories about public policy than most people who have real jobs and pick up stories about something else. Learning and education are largely formal training in more stories to tell in response to prompts.

I’ve been thinking about programming a Grumpy Economist bot. Training an AI on the corpus of my blog, opeds, teaching, and academic writing would probably give a darn good approximation to how I answer questions, because it’s a darn good approximation to how I work.

It turns out I’m late to the game: David Beckworth has already programmed up an AI version of himself, the “Macro Musings” bot. It does a particularly good job when asked about theories of inflation:

(Full tweet here.) The bot seems to have been trained on a larger dataset, since it is more FTPL-enthusiastic than David! A “what would Milton Friedman say?” bot is a fun idea. (Update: The Salem Center headed by Carlos Carvalho has already built the Milton Friedman AI: https://friedman.ai/)

Now, not everything I do is complete recycling, predictable from my large corpus of rambling or from training on things I’ve read or conversations I’ve had. Every now and then, someone asks me a question I don’t have a canned answer to. I have to think. After a week or two, I create a new story. A great economist asked me for intuition about how higher interest rates could raise inflation. It took a week. I now have a good story, p. 13 of the new “expectations and neutrality.” Walking back to Hoover from a seminar, Bob Hall asked me how government bonds could have such low returns if they are a claim to surpluses, since surpluses, like dividends, are procyclical. The “s-shaped surplus” business and a whole chapter of FTPL emerged after a few weeks of rumination. It’s a new story, which I tell often (perhaps too often, as some of my colleagues might complain by now). Newton and Einstein created new stories. This creativity seems like the human ability that AI will have a long, hard time replicating, though perhaps I’m deluding myself about just how original my new stories are. When I get that AI programmed up, I’ll ask it the next puzzle that comes along.

I also read, I talk to people like my Goodfellows, I pick up new stories, and they go into the corpus. Just yesterday I visited Chicago and had some wonderful conversations that produced new stories, ready to repeat. From one economist: Uber gave in to pressure and allowed tips on the platform. In the short run, drivers loved it because their wages went up. But that brought in more drivers, which led to longer wait times and the base pay going down. In the end the average price and driver wage are right back where they were before. What a lovely story of supply and demand: pay attention to the equilibrium response. (A lot of my stories on this blog have that theme.)
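The Uber story is, at heart, a free-entry argument. Here is a minimal sketch of just that channel, under assumptions that are mine and not the economist's: rider demand is fixed at a hypothetical number of rides per hour, drivers are paid per ride, rides are split evenly among drivers, and drivers keep entering until hourly earnings fall back to a common reservation wage. All numbers are made up, and the sketch leaves out the base-pay cut in the story; it is not a model of Uber’s actual pricing.

```python
def hourly_wage(pay_per_ride: float, total_rides: float, drivers: float) -> float:
    """Hourly earnings per driver when total_rides per hour are split evenly among drivers."""
    return pay_per_ride * total_rides / drivers


def free_entry_drivers(pay_per_ride: float, total_rides: float, reservation_wage: float) -> float:
    """Number of drivers at which hourly earnings equal the reservation wage (free entry)."""
    return pay_per_ride * total_rides / reservation_wage


# Hypothetical numbers: 1,000 rides per hour, $10 base pay per ride, drivers need $20/hour.
TOTAL_RIDES = 1_000.0
RESERVATION_WAGE = 20.0
BASE_PAY = 10.0
TIP = 2.0  # hypothetical average tip per ride once tipping is allowed

drivers_before = free_entry_drivers(BASE_PAY, TOTAL_RIDES, RESERVATION_WAGE)

# Short run: tips arrive, but the number of drivers has not yet adjusted.
short_run_wage = hourly_wage(BASE_PAY + TIP, TOTAL_RIDES, drivers_before)

# Long run: new drivers enter until the hourly wage is back at the reservation wage.
drivers_after = free_entry_drivers(BASE_PAY + TIP, TOTAL_RIDES, RESERVATION_WAGE)
long_run_wage = hourly_wage(BASE_PAY + TIP, TOTAL_RIDES, drivers_after)

print(f"drivers: {drivers_before:.0f} -> {drivers_after:.0f}")
print(f"rides per driver per hour: {TOTAL_RIDES / drivers_before:.2f} -> {TOTAL_RIDES / drivers_after:.2f}")
print(f"hourly wage: {RESERVATION_WAGE:.2f} before, {short_run_wage:.2f} short run, {long_run_wage:.2f} long run")
```

The tip raises pay per ride, but entry means each driver gets fewer rides per hour (more idle time between fares), so hourly earnings end up right where they started.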

AI can do that excellently, by training on new stories. But I wonder if it would pick out as salient and memorable the same ones I would. I love “you forgot the supply curve” or “you forgot the behavioral response” stories. (The first story I learned, the one that turned me into an economist: The region of Tuscany had a problem with vipers, poisonous snakes. It offered a $1 bounty for each dead viper. Guess what happened next? Hint: it’s cheap to raise vipers by the thousands.) Can AI learn to train itself on new stories in a productive direction, not just picking new ones that reinforce old prejudices, but not going off on a left-wing social justice rant either?

Our social and professional networks are also key ingredients in filtering and passing on new stories. People who tell good stories become higher-priority nodes in my network. There is nothing I enjoy more than being proven wrong: having a previous story flatly contradicted and forming a new one. If AI is going to be like humans, it needs clubs.

I also forget stories. I recently spent a week inventing a new calculation and then I found I had already done it 20 years ago in a published paper. I’ve forgotten a lot of the physics I loved and studied so hard in college. AI might not forget. But then again it might. I gather that as it trains on new data it tends to get worse on old data. Maybe some forgetting is optimal. If nothing else it’s a way not to repeat the same story too often!

This preamble leads me to recognize a business that will certainly be greatly influenced by large language models — the writing of blog posts, opeds, giving of interviews, and so forth. If 90% of what I do can be replicated, what does that mean for this business?

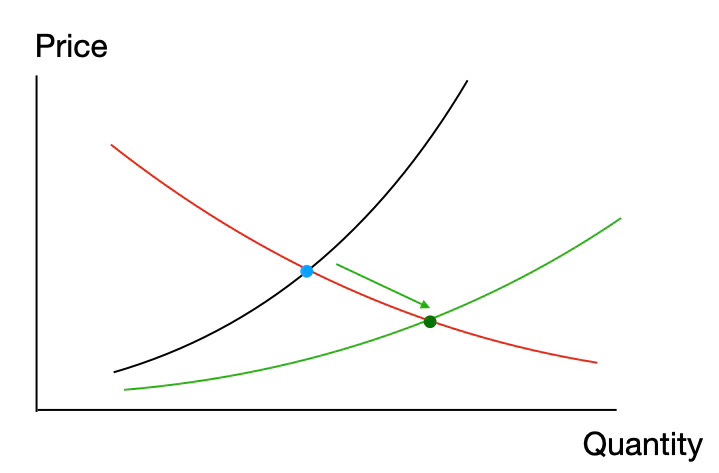

Your natural instinct might be, this business is toast and will be totally displaced by automation. Not so fast. Here is a new story for you. Look at supply and demand:

The upper supply curve (rising to the right) is the supply of commentary, and where it intersects today’s demand for commentary gives today’s price and quantity. LLMs push the supply curve down and to the right, as shown by the green arrow and the new supply curve. I could certainly write more blog posts faster if I at least started with the bot and then edited. (I may try, but that leads to an investment question: the human needs to invest time to get the bot running.) A colleague who is further ahead in this process reports that he routinely asks Claude.ai to summarize each academic paper in a 600-word oped, and he has found lately that he doesn’t need to do any editing at all! The shift is both down and to the right, however: we could produce more for the same cost in time, or we could write each one faster.

Does that mean that the commentary business will end because the price will crash? Just asking the question in the supply and demand context already tells you the answer is no. At a lower price, there is more demand, so the quantity expands. This could be the golden age of commentary! Indeed, quantity could expand so much that total revenue (price times quantity, the area of the box from the origin to the supply-demand intersection marked by the circle) could actually increase!
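Whether the box gets bigger depends on how flat demand is. A minimal sketch, assuming (my assumption, not the graph’s) constant-elasticity curves: demand Q = A * P**(-eps) and supply Q = B * P**eta, with the AI-driven supply shift modeled as an increase in the hypothetical parameter B. Revenue along the demand curve is A * P**(1 - eps), so a lower price raises revenue only when demand is elastic (eps > 1). The numbers are made up for illustration.

```python
def equilibrium(A: float, B: float, eps: float, eta: float) -> tuple[float, float, float]:
    """Price, quantity, and revenue where demand A*P**(-eps) meets supply B*P**eta."""
    # Setting A*P**(-eps) == B*P**eta and solving gives P = (A/B)**(1/(eps+eta)).
    price = (A / B) ** (1.0 / (eps + eta))
    quantity = A * price ** (-eps)
    return price, quantity, price * quantity


A, ETA = 100.0, 1.0           # hypothetical demand scale and supply elasticity
B_BEFORE, B_AFTER = 1.0, 4.0  # AI shifts supply out: more output at every price

results = {}
for eps in (0.5, 2.0):  # inelastic vs. elastic demand
    before = equilibrium(A, B_BEFORE, eps, ETA)
    after = equilibrium(A, B_AFTER, eps, ETA)
    results[eps] = (before, after)
    print(f"eps={eps}: price {before[0]:.2f} -> {after[0]:.2f}, "
          f"quantity {before[1]:.2f} -> {after[1]:.2f}, "
          f"revenue {before[2]:.2f} -> {after[2]:.2f}")
```

In both cases the price falls and quantity rises; revenue falls with inelastic demand (eps = 0.5) and rises with elastic demand (eps = 2).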

This has happened many times before. Movable type (Gutenberg) lowered the price of books. Did bookselling crash, and the monks starve? No, demand at a lower price was so strong that bookselling took off, and more people made more money doing it. (Though, as always, perhaps different people. The monks went on to other pursuits than copying manuscripts.) The printing press, radio, TV, movies, and the internet each had the same effect.

It’s not so obvious, though, that the demand for commentary is that flat. My inbox is already overwhelmed with interesting-looking blog posts, and there are about 50 tabs open in my browser as I write, with more fascinating articles that I have not read. Relatedly, the “price” in my graph, at least for this blog, is the price of my time to produce it and the price of your time to read it. AI lowers the price for me, but not for you.

Now, what you need is an assistant who knows you and can read through all the mass of stuff that comes in and select and summarize the good stuff. That too is a task that AI seems like it might be pretty good at. There’s a joke (here comes another story I picked up somewhere): Joe says, “Look how great the AI is, I can input four bullet points and a whole PowerPoint presentation comes out!” Jane, receiving the PowerPoint presentation, says, “Look how great the AI is, it boiled down this whole long PowerPoint into four bullet points!” If anyone has a good AI going, I would be curious to read a comment on how your AI summarizes this blog post in a paragraph. But as with my examples of collecting new stories, it has to somehow know which stories are going to resonate with you. Current algorithms are said to be pretty good, often too good, at feeding you what you like, but I want new things that expand my set of stories, and best of all the rare ones that successfully challenge my beliefs.

Indeed, perhaps AI will be more useful as digestion for information overflow than for producing even more. I long wondered, what’s the point of a lecture, when you can just read the book? What’s the point of a seminar, when you can just read the paper? I think the answer is digestion. A lecture forces the professor to say what he can in an hour. That’s a very short time, at best 10,000 words. Professors, at least in economics, notoriously assign endless reading lists that nobody could get through in a decade, but they can’t break the one-hour limit. They can be more or less effective at digestion, and either lose everyone or keep it digestible. Similarly, a good seminar, with an audience that won’t let you show slides of equations like a movie, forces digestion.

In sum, perhaps AI will also help on the demand side, shifting demand to the right as well.

Commentary is also a quality question, not just a quantity question. Most commentary is pretty awful. Humans are not that good at critical reading, sticking to the point, logical continuity, avoiding whataboutism, ad hominem, or other pointless arguments, remembering basic facts, actually answering questions and so on. At least not the ones on my Twitter stream. AI editing might dramatically improve the quality of commentary. Just getting it from C- to B+ would be a great improvement. In many areas (nurse practitioners in rural Africa is another story I just read), offering free B+ skilled companions to people doing jobs like these would be a boon.

Conversely, as with all technology, for the foreseeable future there will be a need for humans to edit the AI, figure out what prompts to send that will most interest readers, recommend and certify AI-produced material, and so on. The introduction of ATMs increased bank employment, by making it easier to open bank branches and offer (overpriced) financial services. (You’ve probably heard that story. Time to stop telling it.) Humans move to the high-value areas.

I also learn a lot by writing. When I write a blog post like this one, I have to think things through, and often the underlying story gets clearer, or I realize it was wrong. If the AI writes all by itself, neither of us is going to get any better. But perhaps the editing part will be just as useful as my slow writing. I learn a lot from the conversations my writing sparks with readers, where I often find my ideas were wrong or need revising. Once the comments are taken over by bots I’m not sure that will continue to work, at least until I get a comment-reading bot going.

A last bullish AI thought. Birthrates are plummeting. Perhaps artificial people are coming along just in time. If we view what’s happening as a further shift from numbers of children to quality of children, with more parental and educational investment in each one, we might get the environmental benefits of fewer people with the economic benefits of more people.

"Introspecting, am I really that much more than a large language model?" I heard Marc Andreessen make the same point sometime in the past couple of years. It has been one of the stories that stuck with *me*.

A related thought: We really only accumulate wisdom through experience. You try to communicate wisdom to kids, but it will never stick; at best they will remember it after having had more experience. But a new AI can be endowed with the neural-net connections of a predecessor simply by copying them to the new AI (an exaggeration, but not too far off), effectively giving it wisdom without the need for costly and time-consuming experience. So consider the possibility that those who think AIs will need to be tempered by human wisdom have it backwards: AIs may become a greater repository of wisdom than humans.

There is a Milton Friedman AI bot! https://friedman.ai/